Planning for AI-based Automation Systems

Artificial intelligence, coupled with machine learning, promises to improve plant operations from the sensor level to the enterprise, but adoption has been slow, with some overzealous starts.

An engineer meticulously performs calibration checks on high-tech manufacturing equipment. With AI-based systems, this function could be accomplished automatically, allowing engineers to spend more time innovating new process controls. Image courtesy of Vilius Kukanauskas from Pixabay

A recent McKinsey article suggests that while many plants could benefit from using AI to accelerate process optimization, few are actually ready for it at every level: equipment on the plant floor, sensors and instrumentation, base-level controls, supervisory/advanced process controls (APC) and, finally, advisory models. The McKinsey report notes that only 10% of plants use AI to describe, predict and inform process decisions.

Only 34% of plants have installed APC systems for critical unit operations. While 75% of plants have instrumentation and sensors in place, only 70% of those instruments are properly calibrated and cataloged. Just 18% of plants have dedicated IT teams supporting AI solutions, and 60% of organizations have a change management strategy in place for using AI solutions in their current operations.

McKinsey advises manufacturers to implement sensor and process upgrades to enable AI deployments. So, what are system integrators (SIs) and automation suppliers doing to help their food processing clients choose the automation equipment, sensors and software needed to build an automation infrastructure that uses AI to make better-informed decisions at the supervisory control/APC level?

A Sane Approach to Sensors and Data

The McKinsey report found that manufacturers’ process automation teams “want to install sensors indiscriminately, without a plan to link to value creation.” So, how can food and beverage processors plan for and intelligently increase sensor coverage when they already spend too much time maintaining and calibrating existing instruments? How should they plan an intelligent sensor network with an eye toward AI-based process control systems?

“Many facilities already have sensors and devices in place that could greatly improve efficiency of their overall process; they just don’t know it,” says Aaron Pfeifer, principal at Atlas OT, a CSIA (Control System Integrators Association) member. “Manufacturers are sold on the buzzword of AI and needing a data lake for it to work, and the only way to build a data lake is with more data. This in turn requires more hardware and software to be sold, draining manufacturers. However, the greatest ROI for low quality product or unplanned downtime can be found in networking existing PLCs and HMIs into a SCADA dashboard or OEE to isolate basic parameters already available.”
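Pfeifer’s point about OEE can be made concrete. OEE is conventionally computed as availability × performance × quality, and all three inputs are counts that most existing PLCs and HMIs already track. The Python sketch below uses hypothetical shift counts; the field names are illustrative, not tags from any particular controller.

```python
from dataclasses import dataclass


@dataclass
class ShiftCounts:
    """Counts a line PLC/HMI typically already exposes (field names are hypothetical)."""
    planned_minutes: float      # scheduled production time for the shift
    downtime_minutes: float     # unplanned stop time logged by the controls
    ideal_rate_per_min: float   # design (nameplate) rate, units per minute
    total_units: int            # units produced
    good_units: int             # units that passed quality checks


def oee(c: ShiftCounts) -> dict:
    """Overall equipment effectiveness = availability x performance x quality."""
    run_minutes = c.planned_minutes - c.downtime_minutes
    availability = run_minutes / c.planned_minutes
    performance = c.total_units / (run_minutes * c.ideal_rate_per_min)
    quality = c.good_units / c.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical 8-hour shift: 48 min of stops, 100 units/min design rate.
print(oee(ShiftCounts(480, 48, 100, 38_880, 34_992)))
```

Breaking OEE into its three factors shows whether losses come from stops, slow running or rejects, which points to the parameters worth isolating first.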

“I can understand the desire to install additional sensors,” says Brandon Stiffler, Beckhoff Automation software product manager. “You can’t harness data if you don’t have it, and there are many highly specialized sensors available today. Typically, there are sensors that are essential to the process, and then there are sensors that are ‘nice-to-haves’—be it for process refinement, fail-safe or redundancy control or additional insights for upstream processes, visualization or analytics.”

Rather than blanketing their facilities with sensors, processors are better served by identifying and monitoring the most critical process variables, says Chris Spray, Lineview customer success director. These are the specific factors, such as flow rates, temperatures and pressures, that directly influence the quality and consistency of the final product. By prioritizing these key parameters, processors can ensure that their sensor network provides the most relevant and actionable data.

“Developing a sensor network geared towards AI-based process control involves careful planning of sensor types, locations and applications that directly link to performance outcomes,” says Gregory Powers, VP digital transformation, Gray Solutions, a CSIA certified member. Sensors should be prioritized by the value of the data they deliver and by whether they support automated calibration, which streamlines maintenance.

In the context of AI and machine learning (ML), even though more data is almost always better, it pays to make sure all currently available metrics are being fully exploited before introducing new hardware, Stiffler adds. Engineering teams should ask some important questions, for example:

- How frequently are we collecting the data?

- What is the resolution?

- Are the relationships between the existing sensors well understood?

- Are we extrapolating all possible features from the data that we do have?

- How does collecting and analyzing this data tie back to actual business priorities?
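Several of these questions can be answered from data already sitting in a historian before any hardware is bought. The sketch below is a minimal illustration, assuming each tag has been exported as (timestamps, values) lists that are already aligned on a common time base; the tag names TT101 and FT201 are hypothetical. It reports each tag’s typical sampling interval and effective resolution, plus the pairwise correlation between tags.

```python
import statistics
from itertools import combinations


def median_interval(timestamps):
    """Typical spacing between samples (same units as the timestamps)."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return statistics.median(gaps)


def effective_resolution(values):
    """Smallest step between distinct recorded values, a proxy for quantization."""
    distinct = sorted(set(values))
    steps = [b - a for a, b in zip(distinct, distinct[1:])]
    return min(steps) if steps else None


def pearson(x, y):
    """Pearson correlation; None if either signal is constant."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / (sxx * syy) ** 0.5


def audit(tags):
    """tags: {name: (timestamps, values)}, assumed aligned on one time base."""
    per_tag = {
        name: {"interval": median_interval(ts), "resolution": effective_resolution(vals)}
        for name, (ts, vals) in tags.items()
    }
    pairs = {(a, b): pearson(tags[a][1], tags[b][1]) for a, b in combinations(tags, 2)}
    return per_tag, pairs


# Hypothetical export: a temperature and a flow tag sampled once per second.
tags = {
    "TT101": ([0, 1, 2, 3, 4], [20.0, 20.5, 21.0, 21.5, 22.0]),
    "FT201": ([0, 1, 2, 3, 4], [10.0, 11.0, 12.0, 13.0, 14.0]),
}
print(audit(tags))
```

A correlation near ±1 suggests two tags largely carry the same information, while a coarse resolution or a long sampling interval may explain a weak model before a new sensor would.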

To begin, teams should inventory key processes, says Thomas Kuckhoff, product manager, core technology, Omron Automation Americas. This is commonly accomplished through value stream mapping with task-level granularity. The entire factory floor can be involved, using internal key performance indicators that accurately [show] baseline performance. It is imperative that teams include a wide range of talent, especially those closest to the process itself. Allow operators and manufacturing engineers to bring their years of experience to shaping how the sensor network should roll out and which processes get attention first. These team members will be quick to point out the tribal knowledge that a sensor is meant to quantify or validate.

Enlist Outside Help in Planning

For some processors, the workloads of already overburdened employees may keep them from taking part in planning as much as they would like. When this happens, outside engineering houses and SIs can help.

“It may be best to get the support of some experts experienced in automation and AI-based process control,” says Frank Latino, Festo product manager, electric automation. Such experts, who also bring data science experience, will examine the equipment on the machine, probe the food processor about its pain points and learn what needs to be monitored. Based on the data actually required, they can precisely select sensors and the locations where they need to be installed.

“Any automation project without a plan is destined to fail,” says Alex Pool, president of Masked Owl Technologies, a CSIA member. “Spending time on ‘upfront’ planning and requirements gathering is the best way to ensure that resources are being used efficiently on automation. This includes understanding the problem that is being attacked, the desired outcome—and having a detailed implementation plan to stay on course. The short answer—make sure that the problem is understood and that you aren’t fixing a symptom. The key to AI (in this context) is data. As with any decision-making tool, data collection is critical. Having accurate, timely, and quality data will allow smoother transition to AI in the future.”

To effectively expand sensor coverage and plan their usage, start by developing an overall control systems philosophy outline, suggests Jim Vortherms, CRB senior director, control systems integration. This should provide an unbiased assessment of the processor’s current state, its goals and its staff’s capabilities. From this, create a clear plan for managing and installing sensors, as well as utilizing the data they produce. Leveraging instrumentation, sensors and control hardware with built-in diagnostic capabilities enables predictive calibration and maintenance, improving time efficiency and freeing up resources.
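Predictive calibration can be as simple as trending the as-found errors from past calibration checks. The sketch below assumes drift is roughly linear over time, which real instruments do not always follow, and uses hypothetical monthly check data with a ±0.5 °C tolerance; devices with built-in diagnostics could supply such readings automatically.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_recalibration(check_days, as_found_errors, tolerance):
    """Extrapolate a linear drift trend to the day |error| reaches the tolerance.

    Returns None if no drift trend is detected, or 0.0 if the trend says the
    limit has already been reached.
    """
    slope, intercept = fit_line(check_days, as_found_errors)
    if slope == 0:
        return None
    limit = tolerance if slope > 0 else -tolerance   # drift heads toward this limit
    crossing_day = (limit - intercept) / slope
    return max(0.0, crossing_day - check_days[-1])


# Hypothetical as-found errors (deg C) from monthly checks, +/-0.5 deg C tolerance.
print(days_until_recalibration([0, 30, 60, 90], [0.0, 0.1, 0.2, 0.3], 0.5))
```

Scheduling calibration from a trend like this, instead of a fixed calendar, is what frees up maintenance time on instruments that are holding steady.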

When designing a sensor network, organizations should, as the adage goes, “measure twice, cut once,” says Lindsay Hilbelink, global strategic marketing manager at Eurotech, a CSIA member. “By taking time in planning their approach, organizations can optimize data quality, infrastructure scalability and cybersecurity, thereby ensuring a successful solution with long-term value.”

Use a Phased Implementation Plan that is Scalable

Teams should set out a phased implementation plan, Hilbelink adds. Rather than attempting to overhaul everything at once, they should start by defining clear improvement goals and then focus on areas where additional data can create measurable value.

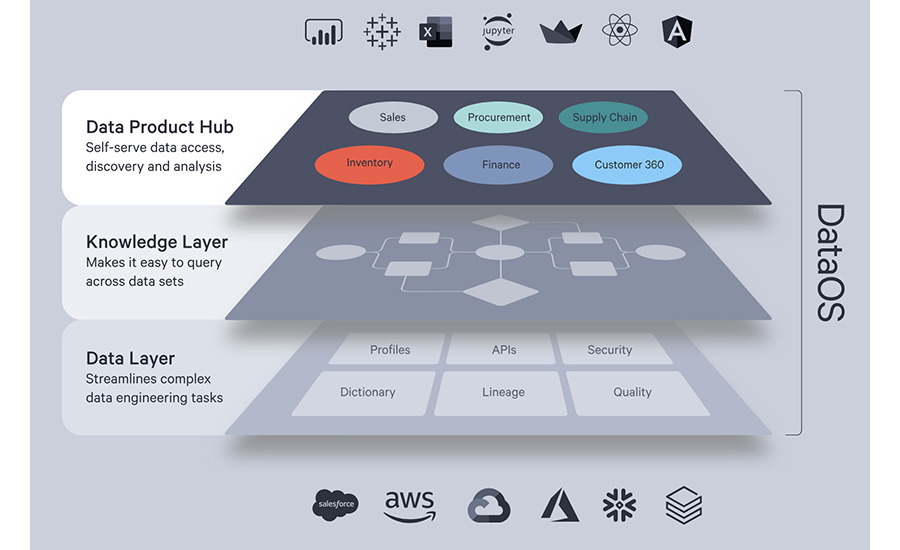

“A phased implementation approach is going to be more effective, starting with pilot areas that can demonstrate clear ROI while validating maintenance requirements before scaling,” says Srujan Akula, CEO and cofounder of The Modern Data Company. “We’ve seen this succeed with a major equipment manufacturer who transformed their sensor data into structured data products, powering AI-driven warranty management and predictive maintenance programs that reduced downtime by 30% and maintenance costs by 25%.”

In a phased implementation plan, once gaps are identified, smart sensors and edge computing can be leveraged to streamline deployment, reduce maintenance and enable real-time AI-based process control, Hilbelink adds. These advanced solutions can help alleviate the burden of maintaining and calibrating existing instruments while paving the way for more sophisticated analytics.

As the sensor network expands, it’s essential to build a scalable data pipeline capable of ingesting and harmonizing information from both legacy systems and new IoT devices, Hilbelink says. This unified data architecture should support real-time data streaming, edge analytics and seamless integration with cloud platforms, laying the groundwork for advanced AI-driven process optimization and predictive maintenance strategies.
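Harmonizing legacy and IoT data usually starts with mapping every source into one record schema with consistent units and time zones. In this minimal sketch, the legacy PLC row format, the JSON field names and the assumption that the PLC historian logs timestamps in UTC are all hypothetical.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """Unified record: every source ends up in this shape."""
    source: str
    tag: str
    ts: datetime      # always timezone-aware UTC
    value_c: float    # temperature in degrees Celsius


def from_legacy_plc(row: tuple[str, str, float]) -> Reading:
    """Hypothetical legacy row: (tag, 'YYYY-MM-DD HH:MM:SS' in UTC, value in deg F)."""
    tag, ts_text, value_f = row
    ts = datetime.strptime(ts_text, "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc)
    return Reading("plc", tag, ts, (value_f - 32.0) * 5.0 / 9.0)


def from_iot_json(payload: str) -> Reading:
    """Hypothetical IoT message: {"device": ..., "ts": epoch seconds, "temp_c": ...}."""
    msg = json.loads(payload)
    ts = datetime.fromtimestamp(msg["ts"], tz=timezone.utc)
    return Reading("iot", msg["device"], ts, float(msg["temp_c"]))


readings = sorted(
    [
        from_legacy_plc(("TT101", "2024-05-01 08:00:00", 212.0)),
        from_iot_json('{"device": "probe-7", "ts": 1714550400, "temp_c": 74.0}'),
    ],
    key=lambda r: r.ts,
)
for r in readings:
    print(r)
```

Converting at ingestion keeps downstream analytics and AI models from having to know which device produced a value.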

AI and its Roles

AI plays a crucial role in the optimization process by enabling the creation of “virtual sensors,” adds Lineview’s Spray. These are essentially AI-powered models that can accurately estimate less critical variables, such as electricity consumption, based on data from existing sensors and historical records. For instance, AI can analyze the operational patterns of various drives and motors to predict overall electricity usage with a high degree of accuracy. This reduces the need for dedicated electricity sensors, minimizing installation and maintenance costs.
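A virtual sensor of this kind can be sketched as a regression from existing drive signals to electricity use. This example assumes a linear relationship and a training period during which a temporary meter recorded actual kW; the data and the choice of ordinary least squares are illustrative, and production virtual sensors may well use nonlinear models.

```python
def solve_linear(A, b):
    """Solve A w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("features are collinear; the model is not identifiable")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= factor * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_virtual_sensor(feature_rows, measured_kw):
    """Least-squares fit of kW = w0 + w1*f1 + w2*f2 + ... via the normal equations."""
    X = [[1.0, *row] for row in feature_rows]    # leading 1.0 is the intercept term
    n = len(X[0])
    XtX = [[sum(x[i] * x[j] for x in X) for j in range(n)] for i in range(n)]
    Xty = [sum(x[i] * y for x, y in zip(X, measured_kw)) for i in range(n)]
    return solve_linear(XtX, Xty)


def estimate_kw(weights, features):
    """The 'virtual sensor': estimated kW from existing drive signals."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], features))


# Hypothetical history: (mixer drive load %, conveyor drive load %) -> metered kW,
# recorded while a temporary meter was installed.
history = [(10, 20), (30, 10), (50, 40), (70, 60), (90, 30)]
metered = [5 + 0.2 * a + 0.5 * b for a, b in history]
weights = fit_virtual_sensor(history, metered)
print(round(estimate_kw(weights, (40, 50)), 3))   # 5 + 0.2*40 + 0.5*50 = 38.0
```

Once fitted, the temporary meter can be removed and the estimate computed from drive data the controls already collect.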

To future-proof sensor networks for AI-driven process control, processors should make strategic decisions around return on investment (ROI) for each system, considering both current and future calibration needs, says Eng. Felipe Sabino Costa, senior product marketing manager - networking & cybersecurity, Moxa Americas, Inc., a CSIA partner member. While AI and looped systems can reduce calibration efforts, standards such as time-sensitive networking (TSN) are critical for accommodating diverse requirements and ensuring scalability. By adopting TSN, manufacturers can support both real-time control and future data-intensive AI applications. Additionally, estimating future bandwidth needs, which could exceed 10 Gbps as AI adoption grows, allows for the design of communication paths that can handle increasing data loads and system complexity.
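Estimating future bandwidth is mostly arithmetic: devices × sample rate × bytes per sample, padded for protocol overhead and growth. The device counts, payload sizes, 30% overhead and 2× growth factor below are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeviceClass:
    name: str
    count: int
    samples_per_sec: float
    bytes_per_sample: int


def required_mbps(devices, protocol_overhead=0.3, growth_factor=1.0):
    """Aggregate payload rate in megabits per second, padded for overhead and growth."""
    payload_bits = sum(d.count * d.samples_per_sec * d.bytes_per_sample * 8 for d in devices)
    return payload_bits * (1 + protocol_overhead) * growth_factor / 1e6


# Hypothetical line: 200 process sensors plus 4 vision cameras (2 MB frames at 30 fps).
line = [
    DeviceClass("process sensors", 200, 10, 8),
    DeviceClass("vision cameras", 4, 30, 2_000_000),
]
print(f"{required_mbps(line, growth_factor=2.0):.0f} Mbps")   # prints "4992 Mbps"
```

With these numbers the cameras dominate, and the padded total lands near 5 Gbps, well beyond a single 1 Gbps link.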

APC and AI to Improve Operations

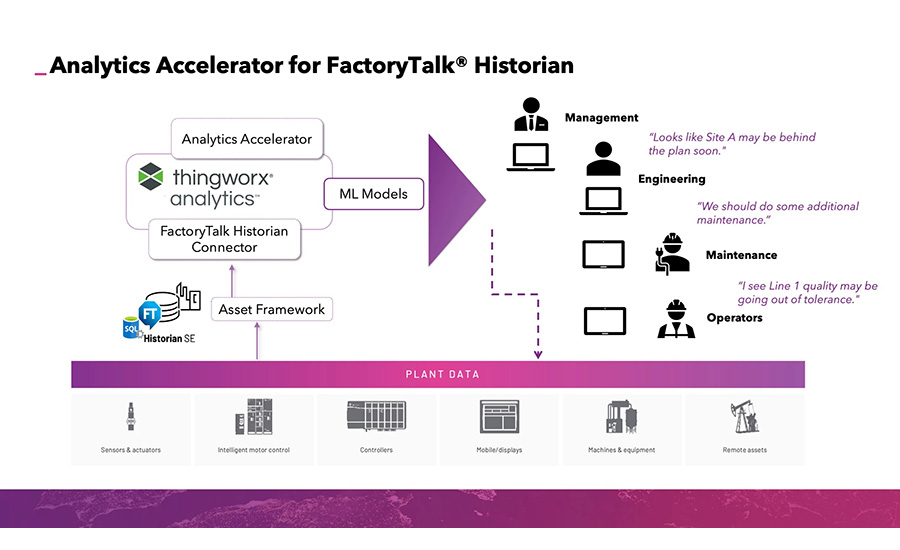

To optimize advanced process controls (APC), processors need robust, well-structured data from sources like SCADA, MES, PLCs and ERP systems, says Gray’s Powers. This data must be cleansed, validated and consistently structured to support accurate APC models. Digital twins and predictive modeling should be used to improve process accuracy and expand APC application across production stages. APC models thrive when regularly optimized through feedback from past performance, helping to extend APC coverage to more processes, including upstream/downstream operations and utilities.
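Cleansing and validation often begin with range checks and gap handling. The sketch below assumes hypothetical engineering limits, marks out-of-range or missing samples as invalid and linearly interpolates only short interior gaps, leaving longer gaps visible rather than inventing data.

```python
import math


def cleanse(samples, low, high, max_gap=2):
    """Validate a sample series and fill only short gaps.

    samples: floats, with None for missing values.
    Returns (cleaned series, number of samples that failed validation).
    """
    valid = [
        s if s is not None and not math.isnan(s) and low <= s <= high else None
        for s in samples
    ]
    invalid_count = sum(1 for s in valid if s is None)

    out = list(valid)
    i = 0
    while i < len(out):
        if out[i] is not None:
            i += 1
            continue
        j = i
        while j < len(out) and out[j] is None:   # find the end of this gap
            j += 1
        gap = j - i
        if i > 0 and j < len(out) and gap <= max_gap:
            left, right = out[i - 1], out[j]
            for k in range(gap):                  # straight line between neighbors
                out[i + k] = left + (right - left) * (k + 1) / (gap + 1)
        i = j
    return out, invalid_count


# Hypothetical cooker temperatures (deg C): one dropout and one spike of 999.
print(cleanse([70.0, None, 74.0, 999.0, 76.0, 77.0], low=0.0, high=150.0))
# ([70.0, 72.0, 74.0, 75.0, 76.0, 77.0], 2)
```

Reporting the invalid count alongside the cleaned series keeps data quality itself visible, which matters when the series later feeds an APC model.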

Processors can improve their use of advanced process controls, while keeping an eye on AI-based APC, by understanding, choosing and then piloting the right control architecture for their desired outcome, says Omron’s Kuckhoff. Generative AI-based advanced controls, trained on other algorithms or synthetic data, may be best suited for producing unique process outputs, for example, where a novel output is considered a feature and not a bug.

Applied AI-based advanced controls, trained on vast amounts of unstructured data, may be best suited for predicting process outcomes, Kuckhoff adds, for example where accurate forecasting is critical to meeting output targets. Machine learning trained on process observations may be best suited for keeping existing production processes within tighter allowable thresholds where consistency is critical to meeting cost targets.

Even when assisted by AI, APC takes some work to set up, according to CRB’s Vortherms. The first step in implementing or expanding the use of APC is conducting a thorough analysis and gaining a solid understanding of the process and the various parameters that impact it. Once the process is fully understood, an analysis can be done to ensure the appropriate sensors are in place to measure the correct variables for feeding into an APC model. Developing and testing multiple APC models in simulation against the process will help determine the most effective model and the correct variables to apply.
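Testing candidates in simulation can be illustrated with a simple stand-in. Here each candidate is a PI tuning on a hypothetical first-order process model, scored by integrated absolute error (IAE) after a setpoint step. Real APC candidates are typically multivariable models, but the workflow of comparing in simulation and then choosing is the same idea.

```python
def simulate_pi(kp, ki, process_gain=2.0, time_constant=20.0, dt=1.0,
                setpoint=1.0, steps=300, u_max=10.0):
    """Integrated absolute error (IAE) of a PI loop on a first-order process model."""
    y, integral, iae = 0.0, 0.0, 0.0
    for _ in range(steps):
        error = setpoint - y
        candidate_integral = integral + error * dt
        u_unclamped = kp * error + ki * candidate_integral
        u = min(max(u_unclamped, 0.0), u_max)            # actuator limits
        if u == u_unclamped:
            integral = candidate_integral               # simple anti-windup: freeze when saturated
        y += dt / time_constant * (process_gain * u - y)   # Euler step of the process model
        iae += abs(error) * dt
    return iae


def rank_candidates(candidates):
    """Score each (kp, ki) tuning in simulation; lower IAE is better."""
    scores = {c: simulate_pi(*c) for c in candidates}
    return sorted(scores.items(), key=lambda item: item[1])


for (kp, ki), iae in rank_candidates([(0.1, 0.005), (1.0, 0.05), (3.0, 0.15)]):
    print(f"kp={kp:<4} ki={ki:<6} IAE={iae:.1f}")
```

Because every candidate runs against the same model and disturbance, the comparison costs nothing in production time, which is the point Vortherms makes about testing in simulation first.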

Is AI-based APC Ready for Prime-Time?

While AI-based APC solutions are still developing, Powers suggests that AI-driven vision systems are already widely deployed, showing the potential of AI-based tools in food processing operations. (See sidebar, “AI Sorting Process Speeds Up Chicken Nugget Sorting.”)

“They (AI-based APC solutions) are available now,” says Beckhoff’s Stiffler. “For starters, look for controllers that have broad connectivity and data acquisition capabilities. In addition to running the process, they can also share the necessary information with any required external systems for analysis and/or training. If a machine learning (ML) model is identified and trained to refine a specific process, it is possible to execute this model in sync with the real-time control — think of it like a control loop on steroids. Finally, you can continually back feed the results and ‘close the loop’ to further refine the model and improve the process.”
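The “close the loop” idea can be sketched in a few lines. In this toy example (not Beckhoff’s implementation), a model estimates how a measured disturbance affects the output, the controller uses that estimate every cycle, and the observed error updates the model with a least-mean-squares (LMS) step. The plant, gains and disturbance range are hypothetical, and a real controller would run this inside its deterministic task cycle.

```python
import random


def run_adaptive_loop(cycles=200, step_size=0.5, seed=1):
    """Feedforward control whose model is refined every cycle by an LMS update."""
    rng = random.Random(seed)
    plant_gain = 2.0       # assumed known actuator gain
    true_effect = 3.0      # hypothetical disturbance sensitivity, unknown to the controller
    setpoint = 10.0
    w = 0.0                # the model's estimate of true_effect
    abs_errors = []
    for _ in range(cycles):
        x = rng.uniform(0.5, 1.5)                 # measured disturbance, e.g. inlet temperature deviation
        u = (setpoint - w * x) / plant_gain       # model-based control action
        y = plant_gain * u + true_effect * x      # toy plant response
        error = y - setpoint                      # equals (true_effect - w) * x
        w += step_size * error * x                # feed the result back to refine the model
        abs_errors.append(abs(error))
    return w, abs_errors


w, errs = run_adaptive_loop()
print(f"learned effect {w:.3f}, first |error| {errs[0]:.2f}, last |error| {errs[-1]:.1e}")
```

The step size sets how aggressively the model adapts; too large a value makes the update itself unstable, one reason such loops need careful validation before touching a live process.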

But AI-based solutions are not yet ready for really tight process control, according to Masked Owl’s Pool. “Adding APCs to production and distribution can reduce safety concerns, improve consistency and quality and consolidate the workforce to ease labor shortage concerns. This can manifest in many ways, depending on the problem. AI-based solutions are fine for data collection, advisory and decision-making support; however, they are not ready to make changes to the real world. For now, that needs to stay within a traditional APC system,” Pool says.

To maximize the potential of APC, food processors must prioritize high-quality data from robust sensor networks and implement effective data management systems, says Lineview’s Spray. Accurate process models, built with data-driven techniques that incorporate operator knowledge, are essential for effective control. Employing model predictive control (MPC) allows for proactive adjustments and greater process stability. But APC/MPC requires a high level of operator training and engagement from people who are comfortable with the technology.
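The principle behind MPC, predicting ahead with a process model and choosing the move with the best predicted outcome, can be shown with a deliberately small example. This sketch assumes a single-input, first-order model with hypothetical coefficients, holds each candidate input constant over the horizon and searches a coarse grid; industrial MPC uses multivariable models, constraints and optimization solvers instead.

```python
def mpc_move(y, u_prev, setpoint, a=0.9, b=0.2, horizon=10, move_weight=0.1):
    """Pick the input that minimizes predicted tracking error plus a move penalty.

    Model (assumed, identified offline): y[k+1] = a * y[k] + b * u[k].
    Simplification: each candidate input is held constant across the horizon.
    """
    best_u, best_cost = 0.0, float("inf")
    for i in range(101):                      # candidate inputs 0.0 to 10.0 in 0.1 steps
        u = i * 0.1
        cost = move_weight * (u - u_prev) ** 2
        y_pred = y
        for _ in range(horizon):
            y_pred = a * y_pred + b * u
            cost += (y_pred - setpoint) ** 2
        if cost < best_cost:
            best_u, best_cost = u, cost
    return best_u


def run_closed_loop(setpoint=5.0, steps=200):
    y, u = 0.0, 0.0
    for _ in range(steps):
        u = mpc_move(y, u, setpoint)          # receding horizon: apply only the first move
        y = 0.9 * y + 0.2 * u                 # plant assumed to match the model exactly
    return y, u


print(run_closed_loop())
```

The move penalty is where operator-friendly behavior is tuned: raising it trades speed for gentler actuator changes.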

However, AI-based APC solutions are emerging, offering greater adaptability and learning capabilities, Spray adds. “These systems can automatically refine models and optimize control strategies, especially for complex processes. AI can also detect anomalies, providing early warnings to prevent downtime. While still maturing, AI-powered APCs hold immense promise for increasing efficiency and optimizing operations in the food industry.”
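One simple form of anomaly detection is a rolling z-score: flag a reading that sits far outside the recent distribution. The window length and threshold below are assumptions to tune per signal, and this statistical check is only a stand-in for the more capable AI models described above.

```python
import statistics
from collections import deque


def detect_anomalies(values, window=20, threshold=4.0):
    """Return indices whose z-score against the trailing window exceeds the threshold."""
    history = deque(maxlen=window)
    alarms = []
    for i, v in enumerate(values):
        if len(history) == window:
            base = list(history)
            mean = statistics.fmean(base)
            sd = statistics.pstdev(base)
            if sd > 0 and abs(v - mean) / sd > threshold:
                alarms.append(i)
                continue            # keep the outlier out of the baseline
        history.append(v)
    return alarms


# Hypothetical motor current oscillating around 50 A with one spike at index 30.
readings = [50.0 + (0.5 if i % 2 else -0.5) for i in range(40)]
readings[30] = 60.0
print(detect_anomalies(readings))   # [30]
```

Excluding flagged points from the baseline stops a single fault from masking the next one.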

To improve the use of APCs and increase their operational coverage, processors can leverage modern software solutions that enhance integration with various hardware platforms, ensuring consistent performance and easier maintenance, says Dave Bader, Eurotech VP of business development. These solutions enable real-time data management, crucial for monitoring and controlling APC systems, thereby enhancing predictive accuracy and responsiveness.

AI Sorting Process Speeds Up Chicken Nugget Sorting

In the U.S., frozen chicken nugget sales alone reached $1.1 billion by mid-2023, an 18% increase over the previous year. Within this rise is another emerging trend—a growing demand for “prime” whole chicken breast nuggets, as opposed to formed products.

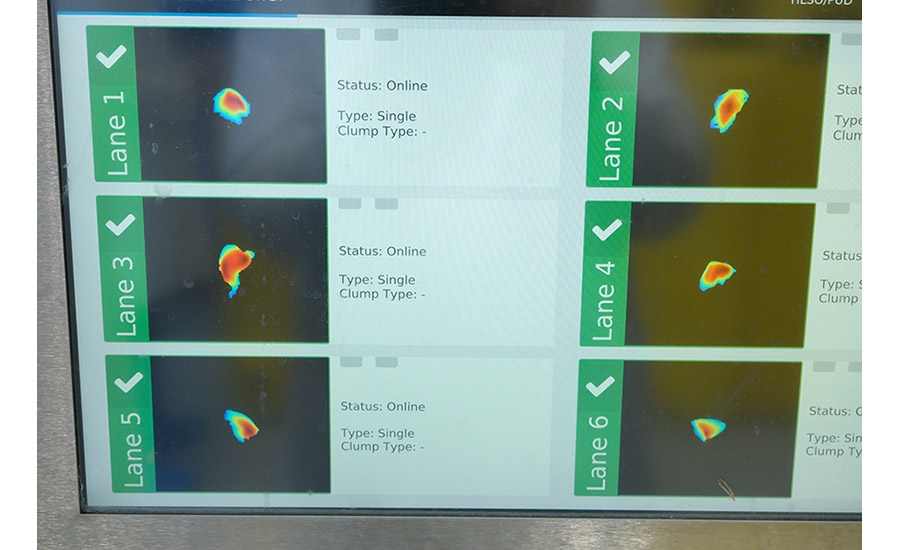

Given the value currently lost to trim, coupled with increasing demand, poultry processors are turning to high-speed, automated sorting systems that singulate, or separate, each piece of chicken so it can be scanned by advanced proprietary vision systems. Nuggets that meet the processor’s size and shape specifications are then sorted into bins or totes.

The process, now enhanced by faster and more accurate AI identification of “prime” nuggets, can sort at speeds of 4,200 pounds per hour and recover most of the high-value nuggets currently lost to trim.

With 75 years of experience in shrimp processing and grading equipment, Laitram Machinery identified similarities between shrimp grading and nugget sorting, specifically the need to separate and scan each item at high speed. Intrigued by the possibilities, Laitram’s R&D team initiated a project several years ago to create a high-speed nugget sorting system.

The result is the SMART Sorter, a patented, fully automated sorter that separates the nuggets and then utilizes high-precision laser imaging and computer vision algorithms to inspect and sort each piece to the processor’s size, shape and weight specifications.
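The final sorting step amounts to matching each measured piece against the processor’s specifications. The sketch below is not Laitram’s algorithm; it assumes the vision system already provides length, width and weight for each piece, and the spec bins and limits are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    name: str
    min_weight_g: float
    max_weight_g: float
    max_aspect_ratio: float    # length / width, a crude stand-in for a shape check


@dataclass(frozen=True)
class Piece:
    length_mm: float
    width_mm: float
    weight_g: float


def assign_bin(piece, specs, fallback="trim"):
    """Return the first spec (in priority order) the piece satisfies, else the fallback."""
    aspect = piece.length_mm / piece.width_mm
    for spec in specs:
        if (spec.min_weight_g <= piece.weight_g <= spec.max_weight_g
                and aspect <= spec.max_aspect_ratio):
            return spec.name
    return fallback


specs = [Spec("large", 18, 24, 2.0), Spec("small", 12, 18, 2.0)]
for p in [Piece(40, 25, 20), Piece(30, 20, 15), Piece(60, 20, 20)]:
    print(p, "->", assign_bin(p, specs))   # large, small, trim
```

Listing bins in priority order resolves pieces that sit on a boundary, such as one weighing exactly 18 g here.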

“We developed a method of singulation at very high speeds that enables us to visually inspect each nugget. By doing that, we get a near-perfect understanding of its size and shape and can recover more prime nuggets,” says James Lapeyre, general manager of Laitram Machinery.

Laitram Machinery recently integrated artificial intelligence (AI) to almost double throughput rates to 6,500-7,000 pounds of nuggets per hour. AI also enhances clump and defect detection, minimizing the risk of “out-of-spec” nuggets reaching customers and further boosting recovery rates.

Lapeyre explains that AI can identify patterns without additional programming. “Over time, the system continues to learn the attributes of a ‘good’ nugget and visually recognizes it. We can also train the system to recognize when there is a clump [two or three potentially good nuggets touching] and how to handle that. In the past, that might have ended up as trim.”

The SMART Sorter also significantly enhances nugget quality, achieving 99% accuracy within specified weight and shape parameters. This precision is crucial, as the retail, foodservice and quick-service restaurant (QSR) sectors frequently require nuggets in diverse sizes and weights. Additionally, chicken strips, also crafted from whole chicken breast, are highly popular and often required in specific dimensions.

AI Advisory Models Help Make Informed Decisions

The question is: How can AI advisory models be used to describe, predict and inform process decisions? “The key is to approach AI implementation strategically, focusing on high-value applications and ensuring proper integration with existing systems and workflows,” Bader says.

The creation and implementation of a digital twin is one of the more effective ways to use a system to describe, predict and inform process decisions, says CRB’s Vortherms. With a digital twin, a processor can test various scenarios to understand outcomes without jeopardizing plant operations, throughput or production capacity.

Automation suppliers play a vital role in helping food processors leverage AI advisory models to inform process decisions, Spray says. They can develop user-friendly interfaces that present AI-generated insights in an easily digestible format, making it simpler for operators to understand and act on recommendations. Real-time optimization suggestions based on both historical and current process data can enhance decision-making significantly.

Tailored AI model solutions that integrate AI into existing process control systems can describe, predict and inform decisions by analyzing historical and real-time data to optimize energy usage, product quality and equipment maintenance, says Moxa’s Costa. Suppliers should guide processors on when to deploy AI, typically in areas where real-time decision-making and process variability are critical, such as temperature control or ingredient mixing. AI models should be deployed gradually, starting with predictive maintenance or quality control, and scaled as confidence in AI-driven insights grows. Continuous monitoring and model adjustment are key to long-term success, as is ensuring a sufficient quantity of high-quality data of the right type for training the algorithms.
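Continuous monitoring can start with tracking a model’s recent prediction error and flagging when it drifts past a limit. The window size and error limit below are hypothetical; in practice a flag like this would prompt a review and possible retraining rather than an automatic model change.

```python
from collections import deque


class ModelMonitor:
    """Rolling mean absolute error (MAE) of a model's predictions."""

    def __init__(self, window=50, mae_limit=1.0):
        self.residuals = deque(maxlen=window)
        self.mae_limit = mae_limit

    def update(self, predicted, actual):
        """Record one prediction; return True when retraining should be reviewed."""
        self.residuals.append(abs(predicted - actual))
        window_full = len(self.residuals) == self.residuals.maxlen
        mae = sum(self.residuals) / len(self.residuals)
        return window_full and mae > self.mae_limit


# Hypothetical history: errors of 0.5 for 50 batches, then the process shifts to errors of 2.0.
monitor = ModelMonitor(window=50, mae_limit=1.0)
flags = [monitor.update(10.0, 10.5) for _ in range(50)]
flags += [monitor.update(10.0, 12.0) for _ in range(50)]
print(flags.index(True))   # 66
```

Waiting for a full window before flagging avoids false alarms from the first few predictions after deployment.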

AI or automated solutions only make sense when they improve safety or deliver real cost savings, says Masked Owl’s Pool. It’s important to realize that a one-size-fits-all solution will not address unique problems and will lead to failed implementations. AI-based implementations should be driven by process improvements, cost savings and/or the mitigation of unsafe operating conditions.
